Database Performance Optimization Tips to Boost Results

Why Database Performance Matters More Than Ever

In today's fast-paced world, users demand instant results. A slow website or application leads to frustration and abandoned transactions, which ultimately means lost revenue. That makes database performance optimization a crucial business requirement.

Imagine an e-commerce site running a flash sale. If the database can't handle the increased traffic, customers will shop elsewhere. This directly impacts profits, showcasing the importance of efficient databases.

Modern applications add another layer of complexity. They require sub-second query responses and support for thousands of concurrent users. Achieving this performance demands careful planning and optimized database strategies.

The Growing Demands on Databases

The rise of microservices, real-time analytics, and AI-driven workloads further intensifies the pressure on databases. Database performance has become essential for any data-driven business.

This shift is compounded by the move toward cloud-based databases. By 2025, over 75% of databases are expected to be on cloud platforms like Microsoft Azure or Amazon Web Services (AWS). This migration is driven by the need for scalability, flexibility, and cost efficiency.

However, cloud migration introduces new challenges. Modern applications need constant availability with minimal maintenance. Organizations that fail to adapt risk frustrated users, lost revenue, and reputational damage. For further insights, check out this resource: Optimizing SQL Server Performance: Best Practices for 2025

The Business Impact of Database Performance

Poor database performance has consequences beyond technical issues. Studies show that 40% of users abandon a website that takes more than 3 seconds to load. This translates to lost sales and customers.

Slow databases also impact internal operations. They decrease employee productivity and create inefficient workflows. Over time, underperformance can damage a company's reputation and competitiveness.

The Need for Proactive Optimization

Organizations must prioritize database performance optimization. This involves a proactive, not reactive, approach. Investing in the right tools, expertise, and strategies prevents costly downtime and improves user satisfaction. Focusing on efficient database management gives businesses a competitive edge.

The Hidden Costs of Poorly Optimized Databases

Slow databases are more than just a minor inconvenience for users. They can have a significant impact on a company's bottom line. Often, this impact goes unnoticed, disguised as vague "technical issues" rather than the financial drain they truly represent. For example, inefficient queries can lead to excessive consumption of cloud resources and unexpectedly high bills, directly impacting profitability.

Quantifying the Financial Impact

The hidden costs of poorly optimized databases extend far beyond cloud expenses. Lost productivity due to slow applications and missed revenue from abandoned transactions add to the burden. The costs associated with addressing user complaints and the potential damage to brand reputation further compound the financial strain. These seemingly small issues can quickly accumulate into substantial losses.

This problem is magnified by the ever-growing volume of data businesses handle. The global volume of data created is exploding, projected to reach over 175 zettabytes by 2025. This growth makes database performance optimization absolutely critical. Poor data quality and inefficient database management cost companies an average of $12.9 million annually. To delve deeper into the world of database optimization, explore this helpful resource on Mastering Database Optimization.

The ROI of Database Performance Optimization

The good news is that addressing these database performance issues yields a measurable return on investment (ROI). Optimized databases result in lower operational costs and increased revenue through an improved user experience, along with higher employee productivity. This creates a compelling business case for investing in database performance optimization.

Turning Performance into Competitive Advantage

Leading organizations view database performance optimization as more than just a cost-saving measure. They see it as a strategic advantage. These companies understand that fast and reliable databases are the foundation of excellent user experiences, which leads to increased customer loyalty and a larger market share. By prioritizing database performance, these companies are gaining a crucial edge in today's competitive market.

Query Optimization Techniques That Actually Work

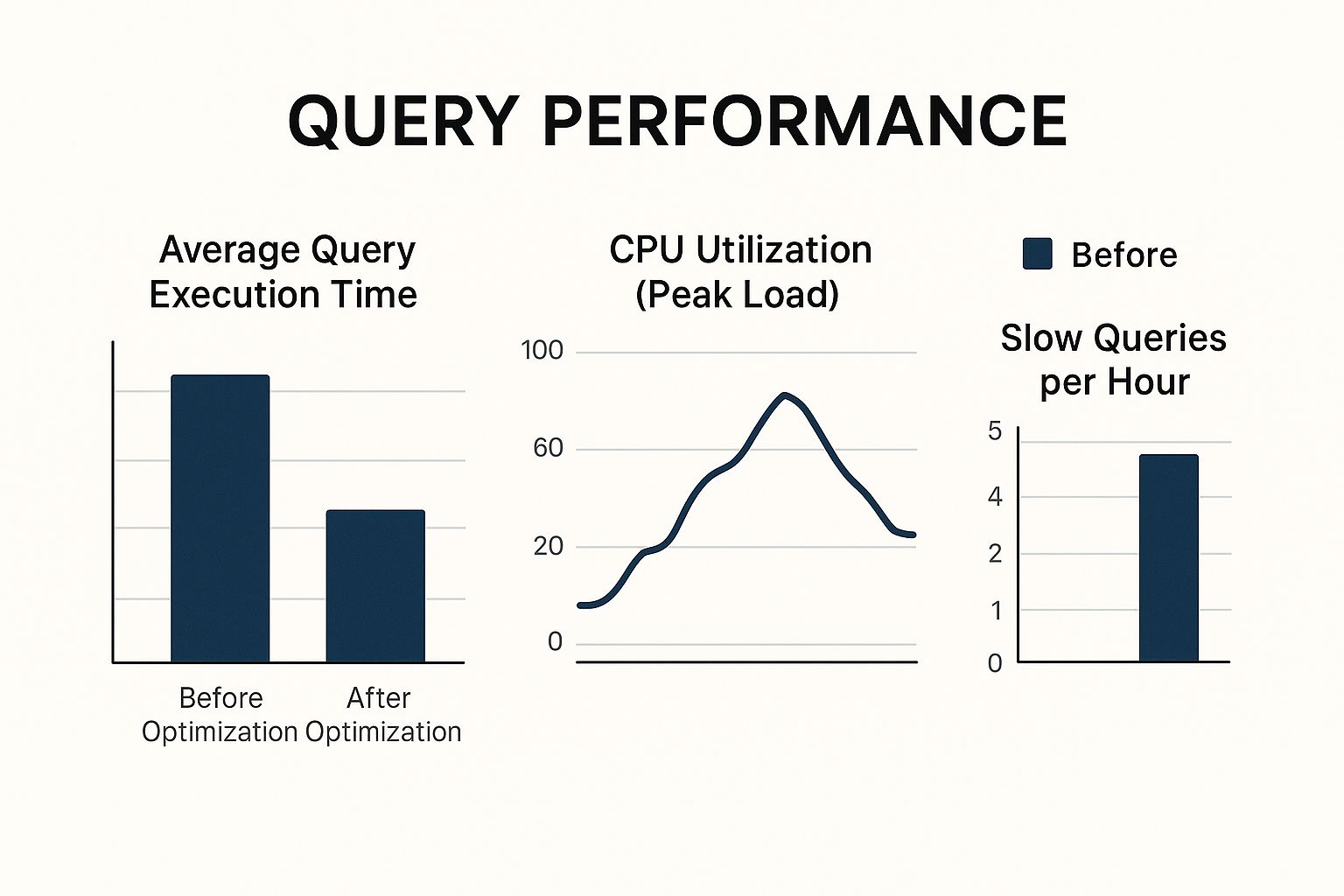

The infographic above illustrates how query optimization impacts key performance metrics. These metrics include average query execution time, CPU utilization during peak loads, and the number of slow queries per hour. Strategic query optimization can significantly reduce execution time and server load, while also minimizing slow queries.

This leads to a better user experience and more efficient use of resources.

Optimizing database queries is essential for achieving top-notch performance. While server configurations are important, writing efficient queries is where the real gains lie. This involves understanding how the database processes requests and using that knowledge to write queries that minimize resource usage.

Analyzing Query Execution Plans

A query execution plan shows how the database will process a query. Analyzing these plans can help pinpoint bottlenecks. Bottlenecks can range from inefficient joins to missing indexes. Knowing where the database spends the most time allows us to focus optimization efforts effectively. Tools like Dynatrace provide insights into query performance and execution plans across various database systems, including Microsoft SQL Server, Oracle, PostgreSQL, MySQL, and MariaDB. This helps identify and optimize resource-intensive queries.
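
As a minimal sketch of reading an execution plan, the following Python snippet uses the standard-library `sqlite3` module as a stand-in for a production database. The `orders` table, its columns, and the index name `idx_orders_customer` are hypothetical, chosen only for illustration. Other systems expose plans through their own commands (for example `EXPLAIN` in PostgreSQL and MySQL), and their output is formatted differently.

```python
import sqlite3

def explain(conn, sql, params=()):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return [row[3] for row in rows]

conn = sqlite3.connect(":memory:")
# Hypothetical table used only for this illustration.
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total) VALUES (?, ?)",
    [(i % 100, i * 1.5) for i in range(1000)],
)

query = "SELECT id, total FROM orders WHERE customer_id = ?"

# Without an index on customer_id, the plan reports a scan of the table.
plan_before = explain(conn, query, (42,))

# After adding an index, the plan reports a search that uses it.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plan_after = explain(conn, query, (42,))

print("before:", plan_before)
print("after: ", plan_after)
```

The "before" plan describes a scan of the whole table, while the "after" plan names the index it searches. The exact wording of each plan line varies between SQLite versions.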

Rewriting Inefficient Queries

Sometimes, even simple-looking queries can be inefficient. Rewriting these queries can drastically improve performance. For example, replacing a poorly performing subquery with a join or optimizing WHERE clauses can yield substantial improvements. Small changes to a query's structure can often lead to significant performance gains.
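
To illustrate the subquery-to-join rewrite mentioned above, here is a small sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their contents are hypothetical. The snippet only demonstrates that the two forms return the same rows; whether the join is actually faster depends on the database and its optimizer, so an execution plan or a timing run should confirm it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema and data for illustration only.
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace'), (3, 'Linus');
INSERT INTO orders (customer_id, total) VALUES (1, 10.0), (1, 15.0), (2, 7.5);
""")

# Correlated subquery: conceptually evaluated once per customer row.
subquery_sql = """
SELECT c.name,
       (SELECT COUNT(*) FROM orders o WHERE o.customer_id = c.id) AS order_count
FROM customers c
ORDER BY c.id
"""

# Equivalent join: LEFT JOIN keeps customers that have no orders,
# and COUNT(o.id) ignores the NULLs produced for them.
join_sql = """
SELECT c.name, COUNT(o.id) AS order_count
FROM customers c
LEFT JOIN orders o ON o.customer_id = c.id
GROUP BY c.id, c.name
ORDER BY c.id
"""

subquery_rows = conn.execute(subquery_sql).fetchall()
join_rows = conn.execute(join_sql).fetchall()
print(subquery_rows == join_rows)  # both forms return the same result
```

Checking that a rewrite returns identical results on representative data is a useful habit before comparing performance.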

Eliminating Unnecessary Joins

Joins combine data from multiple tables, but every join adds work: the database has to read and match rows from each additional table. If a query joins a table whose columns it never selects or filters on, that work is wasted. Think of it like pulling every related book off the library shelf when you only need one. Removing such joins helps the database retrieve the required data more quickly, provided the join was not also being relied on to filter out rows.
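
The sketch below shows one common case of an unnecessary join, again using Python's `sqlite3` module with a hypothetical schema. The query joins `customers` but uses none of its columns. Because `orders.customer_id` is declared `NOT NULL` and, in this sample data, always references an existing customer, dropping the join returns the same rows. That equivalence is an assumption about the data; if orphaned orders could exist, the two queries would differ.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical schema and data for illustration only.
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total REAL NOT NULL
);
INSERT INTO customers VALUES (1, 'Ada'), (2, 'Grace');
INSERT INTO orders (customer_id, total) VALUES (1, 10.0), (1, 15.0), (2, 7.5);
""")

# Joins customers, but never reads a column from it.
with_join = """
SELECT o.id, o.total
FROM orders o
JOIN customers c ON c.id = o.customer_id
WHERE o.customer_id = 1
"""

# Same result without touching the customers table.
without_join = "SELECT id, total FROM orders WHERE customer_id = 1"

def plan_steps(sql):
    """Return the rows of SQLite's EXPLAIN QUERY PLAN for a statement."""
    return conn.execute("EXPLAIN QUERY PLAN " + sql).fetchall()

rows_with_join = sorted(conn.execute(with_join).fetchall())
rows_without_join = sorted(conn.execute(without_join).fetchall())
join_plan = plan_steps(with_join)
simple_plan = plan_steps(without_join)

print(rows_with_join == rows_without_join)
print(len(join_plan), "plan steps with the join,", len(simple_plan), "without")
```

The plan for the joined query contains an extra step for the `customers` table, which is the work the simpler query avoids.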

Leveraging Subqueries Effectively

When used correctly, subqueries are powerful tools for retrieving data. However, poorly written subqueries can cause significant performance problems. Optimizing subqueries or replacing them with joins where appropriate can make query execution much more efficient. This is similar to taking a direct route instead of a detour.

Optimizing WHERE Clauses

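The WHERE clause filters data based on specific criteria. Optimizing this clause is often an easy way to improve database performance. Using more selective conditions, and writing them so the database can use an index (for example, comparing an indexed column directly rather than wrapping it in a function), can often drastically speed up queries. Reordering conditions, by contrast, rarely matters on modern databases, because the query optimizer typically chooses the evaluation order itself.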
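
One concrete way to make a WHERE condition more precise is to compare the indexed column directly instead of wrapping it in a function. The following sketch uses Python's `sqlite3` module; the `orders` table, the text-formatted `created_at` column, and the index name `idx_orders_created` are hypothetical. Both queries select orders from 2024, but only the second form lets SQLite use the index.

```python
import sqlite3

def explain(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

conn = sqlite3.connect(":memory:")
# Hypothetical table; dates are stored as ISO-8601 text (YYYY-MM-DD).
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, created_at TEXT, total REAL)"
)
conn.executemany(
    "INSERT INTO orders (created_at, total) VALUES (?, ?)",
    [(f"{2023 + i % 3}-{1 + i % 12:02d}-15", float(i)) for i in range(300)],
)
conn.execute("CREATE INDEX idx_orders_created ON orders (created_at)")

# Function applied to the column: the index on created_at cannot be used.
wrapped = "SELECT total FROM orders WHERE substr(created_at, 1, 4) = '2024'"

# Range on the bare column: the index can narrow the search.
bare = (
    "SELECT total FROM orders "
    "WHERE created_at >= '2024-01-01' AND created_at < '2025-01-01'"
)

wrapped_plan = explain(conn, wrapped)
bare_plan = explain(conn, bare)
same_rows = sorted(conn.execute(wrapped)) == sorted(conn.execute(bare))

print("wrapped:", wrapped_plan)
print("bare:   ", bare_plan)
print("same rows:", same_rows)
```

The same idea applies in other databases, although each has its own rules about which expressions can use an index.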

To help illustrate the impact of these different optimization techniques, let's look at the following table:

Query Optimization Techniques Impact Assessment

A comparison of different query optimization techniques showing their relative effectiveness for various database workloads.

| Optimization Technique | Implementation Complexity | Performance Impact | Best Use Cases | Limitations |
|---|---|---|---|---|
| Analyzing Query Execution Plans | Low | High | Identifying bottlenecks, understanding query behavior | Requires understanding of execution plans |
| Rewriting Inefficient Queries | Medium | High | Simplifying complex queries, replacing subqueries with joins | Can be time-consuming, requires query expertise |
| Eliminating Unnecessary Joins | Medium | High | Reducing data retrieval time, simplifying queries | Requires careful analysis of data relationships |
| Leveraging Subqueries Effectively | Medium | High | Complex data retrieval, filtering data based on aggregated values | Can be inefficient if not used correctly |
| Optimizing WHERE Clauses | Low | Medium | Filtering data efficiently | Limited impact for complex queries |

This table summarizes the query optimization techniques discussed and their potential impact. Four of the five techniques are rated as high impact. Analyzing query execution plans stands out because it pairs high impact with low implementation complexity, while optimizing WHERE clauses is equally simple to implement but typically yields a smaller gain.

Continuous Query Improvement

Database performance optimization is an ongoing process. It requires regular monitoring and refinement. Continuously reviewing query performance, identifying slow queries, and making improvements will ensure your database runs efficiently. For example, PostgreSQL offers tunable parameters like shared_buffers, work_mem, and maintenance_work_mem to enhance performance. Avoiding full table scans and optimizing complex joins are also vital.

By using these query optimization techniques, you can significantly boost your database's performance. This results in faster applications, happier users, and a healthier bottom line. This proactive approach is key to maintaining efficiency and a competitive advantage.

Strategic Indexing: The Art and Science of Speed

After optimizing your queries, the next crucial step for database performance is indexing. Think of it like a library catalog: effective indexing helps you find the information you need quickly. A strategic approach is vital because poorly implemented indexes can actually hinder performance. For a solid foundation, start with effective data modeling techniques.

Designing Indexes for Specific Workloads

Indexes should be designed with your workload in mind. A database used primarily for reading data benefits from different indexes than one with many write operations. For read-heavy databases, a covering index can be incredibly beneficial.

Covering indexes include all the columns a query needs, eliminating the need to access the main table. This is like finding all the information you need on a library catalog card without having to retrieve the actual book.
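
A minimal sketch of the difference, using Python's `sqlite3` module with a hypothetical `orders` table and hypothetical index names: the first index contains only the filter column, so SQLite still has to visit the table to read `total`. The second index contains both columns, and the plan marks it as a covering index.

```python
import sqlite3

def explain(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

conn = sqlite3.connect(":memory:")
# Hypothetical table for illustration only.
conn.execute(
    "CREATE TABLE orders ("
    "id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL, note TEXT)"
)
conn.executemany(
    "INSERT INTO orders (customer_id, total, note) VALUES (?, ?, ?)",
    [(i % 100, i * 1.5, "n/a") for i in range(1000)],
)

query = "SELECT total FROM orders WHERE customer_id = 42"

# Index on the filter column only: the table is still read for `total`.
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
plain_plan = explain(conn, query)

# Index that also contains `total`: the query is answered from the index alone.
conn.execute("DROP INDEX idx_orders_customer")
conn.execute(
    "CREATE INDEX idx_orders_customer_total ON orders (customer_id, total)"
)
covering_plan = explain(conn, query)

print("plain:   ", plain_plan)
print("covering:", covering_plan)
```

The trade-off is a larger index that must be updated on every write to either column, so covering indexes tend to suit frequently run, read-heavy queries.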

Advanced Indexing Techniques

Beyond the basics, advanced techniques like filtered indexes and composite indexes can further boost performance. Filtered indexes (called partial indexes in PostgreSQL and SQLite) cover only the rows that match a condition, which keeps the index small and makes lookups on that subset faster. Composite indexes span multiple columns and can serve queries that filter or sort on those columns together.
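
The following sketch shows a filtered index in SQLite, where the feature is called a partial index. The `customers` table, its `active` flag, and the index name `idx_active_email` are hypothetical. The index contains only active customers, so it is used for queries restricted to active rows and ignored for others.

```python
import sqlite3

def explain(conn, sql):
    """Return the 'detail' column of SQLite's EXPLAIN QUERY PLAN output."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

conn = sqlite3.connect(":memory:")
# Hypothetical table: roughly one in ten customers is marked active.
conn.execute(
    "CREATE TABLE customers ("
    "id INTEGER PRIMARY KEY, email TEXT, name TEXT, active INTEGER)"
)
conn.executemany(
    "INSERT INTO customers (email, name, active) VALUES (?, ?, ?)",
    [(f"user{i}@example.com", f"User {i}", 1 if i % 10 == 0 else 0)
     for i in range(1000)],
)

# Partial index: only rows with active = 1 are stored in the index.
conn.execute("CREATE INDEX idx_active_email ON customers (email) WHERE active = 1")

# The query's WHERE clause repeats the index condition, so the index applies.
active_plan = explain(
    conn,
    "SELECT name FROM customers WHERE active = 1 AND email = 'user10@example.com'",
)

# Inactive customers are not in the index, so it cannot be used here.
inactive_plan = explain(
    conn,
    "SELECT name FROM customers WHERE active = 0 AND email = 'user11@example.com'",
)

print("active:  ", active_plan)
print("inactive:", inactive_plan)
```

Syntax and matching rules differ between systems, so the equivalent statement in SQL Server or PostgreSQL should be checked against that system's documentation.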

Identifying Missing and Redundant Indexes

Identifying missing indexes is a key area for improvement. A missing index forces the database to scan the entire table, slowing down queries significantly. Imagine having to search every shelf in a library instead of using the catalog! On the other hand, redundant indexes waste resources and slow down writes. These should be identified and removed.
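
One simple heuristic for spotting redundant indexes is to look for an index whose columns are a leading prefix of another index on the same table, since the longer index can usually serve the same lookups. The sketch below is an illustrative assumption, not a complete audit: the function name, the input format (index name mapped to a tuple of columns), and the sample index names are all hypothetical, and it deliberately ignores unique constraints, sort direction, and filtered indexes, each of which can make an apparently redundant index necessary.

```python
def find_redundant_indexes(indexes):
    """Return (redundant, covered_by) pairs for one table's indexes.

    `indexes` maps an index name to a tuple of its column names in order.
    An index is flagged when its columns are a leading prefix of another
    index. For exact duplicates, the alphabetically later name is flagged.
    """
    redundant = []
    for name, cols in indexes.items():
        for other, other_cols in indexes.items():
            if name == other:
                continue
            is_prefix = other_cols[:len(cols)] == cols
            shorter = len(cols) < len(other_cols)
            duplicate_loser = len(cols) == len(other_cols) and name > other
            if is_prefix and (shorter or duplicate_loser):
                redundant.append((name, other))
                break
    return redundant

# Hypothetical indexes on a single table.
indexes = {
    "idx_a": ("customer_id",),
    "idx_ab": ("customer_id", "created_at"),
    "idx_b": ("created_at",),
    "idx_ab_copy": ("customer_id", "created_at"),
}

candidates = find_redundant_indexes(indexes)
print(candidates)
```

Here `idx_a` is flagged because `idx_ab` starts with the same column, and `idx_ab_copy` is flagged as a duplicate. `idx_b` is kept, because `created_at` is not the leading column of any other index. Candidates should be confirmed against real usage statistics before anything is dropped.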

Maintaining Index Health

Databases are dynamic. As your data changes, so too should your indexes. Regularly monitor index usage and fragmentation. Rebuilding or reorganizing fragmented indexes ensures continued efficiency. Much like a library reorganizes its shelves periodically, maintaining index health keeps performance consistent.

Balancing Read and Write Performance

Indexing involves a trade-off. It speeds up reads but adds overhead to writes, since each write requires updating the indexes. The key is to find the right balance between over-indexing, which impacts write performance, and under-indexing, which hinders reads.

Real-World Impact of Index Optimization

Proper indexing can dramatically transform database performance. Many organizations have seen huge improvements in query response times and system throughput after implementing strategic index redesigns. This translates to a better user experience, increased productivity, and lower costs. For example, filtered indexes on a customer database could drastically improve query speeds for specific customer segments, leading to faster reporting and better customer service.

AI-Powered Optimization: Beyond Human Capabilities

Database performance optimization is no longer solely dependent on human expertise. Artificial intelligence (AI) is quickly changing how we approach this crucial task, providing capabilities that surpass traditional methods. AI-powered tools are automating intricate tuning processes, predicting potential bottlenecks, and uncovering insights that even highly experienced DBAs might miss.

Automating the DBA's Toolkit

Historically, database administrators manually tuned queries, created indexes, and managed resources. This manual approach can be time-consuming and prone to human error. AI is changing this by automating many of these tasks.

For example, machine learning algorithms can analyze query patterns and automatically create or delete indexes as required, adapting to real-time workload needs. This automation allows DBAs to focus on higher-level initiatives like schema design, security, and long-term performance strategies.

Furthermore, AI can offer proactive alerts, predicting potential bottlenecks before they affect users. This preventative approach can avoid performance problems and downtime, resulting in a smoother user experience.

From Reactive to Predictive: AI-Driven Insights

Traditional database management is frequently reactive. Administrators typically address performance problems after they've already occurred. AI transforms this model, allowing DBAs to be more proactive.

Machine learning models can analyze historical data and identify current trends to predict potential problems, allowing for optimization before an issue arises. This ability to foresee and address bottlenecks allows DBAs to maintain optimal database performance proactively.

It also frees up valuable time and resources to focus on more strategic activities, such as proactive strategy and database security, instead of constantly putting out fires.

Real-World Applications of AI in Database Optimization

AI-powered database tools are already being used in practical scenarios. Major cloud providers like Microsoft Azure and Google Cloud are incorporating AI features into their database services.

For example, Azure SQL Database's Automatic Tuning service uses machine learning to optimize query execution plans and manage indexes automatically. Similarly, Google BigQuery uses AI to optimize query routing and allocate resources efficiently. You can learn more about query optimization with AI in this article: AI-driven query optimization.

These AI capabilities enable cloud databases to self-optimize, minimizing the need for manual adjustments. This is particularly helpful in environments where workloads change frequently, as the AI can adapt to shifting demands automatically. The benefits include faster and more reliable database performance, reduced operational costs, and more time for businesses to concentrate on innovation.

Before looking at the future of database optimization, it helps to see how AI-powered tools in this space tend to differ.

The following table compares three hypothetical tools to illustrate the dimensions worth evaluating:

AI-Powered Database Optimization Tools Comparison

An illustrative comparison of three hypothetical AI-driven database optimization tools

| Tool/Platform | Key Features | Supported Database Types | Automation Level | Pricing Model | User Ratings |
|---|---|---|---|---|---|
| (Hypothetical) Tool A | Automated indexing, query optimization, anomaly detection | MySQL, PostgreSQL, SQL Server | High | Subscription-based | 4.5/5 |
| (Hypothetical) Tool B | Performance monitoring, predictive scaling, resource allocation | Oracle, MongoDB, Cassandra | Medium | Usage-based | 4.0/5 |
| (Hypothetical) Tool C | Query rewriting, workload forecasting, security analysis | PostgreSQL, Amazon Redshift | Medium | Tiered subscription | 3.5/5 |

This table summarizes the key features, supported database types, level of automation, pricing, and user ratings of three hypothetical AI-driven database optimization tools. As you can see, each tool offers a different set of functionalities and supports various database types. Choosing the right tool depends on your specific needs and requirements.

The Future of Database Optimization

While AI is not a perfect solution, it represents a significant advancement in database performance optimization. Current challenges include the need for extensive datasets to train accurate models and the difficulty in understanding the rationale behind AI-generated recommendations.

However, ongoing research and development efforts are tackling these limitations. The future of database optimization will likely involve even greater use of AI, with increasingly sophisticated algorithms able to manage more complex situations and offer deeper insights into database performance. This evolution will allow organizations to achieve new heights in database performance and efficiency, opening up new possibilities for innovation powered by data.

Cloud Database Optimization: Different Rules, Better Results

Cloud databases operate differently than traditional on-premises solutions. This means that database performance optimization requires a fresh perspective. This section explores the unique challenges and opportunities presented by major cloud platforms like AWS, Azure, and Google Cloud.

Leveraging Cloud-Specific Features

Cloud providers offer features designed to boost database performance. Serverless scaling dynamically adjusts resources based on demand, ensuring consistent performance even during peak traffic. Imagine a restaurant seamlessly adding tables and staff during the lunch rush.

Cloud providers also offer specialized instance types tailored for database workloads. Memory-optimized instances, for example, can dramatically improve read-heavy applications. Managed services handle routine tasks like backups and updates, freeing your team to focus on optimization.

Right-Sizing Resources for Optimal Performance and Cost

A key benefit of the cloud is the flexibility to scale resources. Right-sizing means choosing the correct resources for your workload to achieve a balance between performance and cost. Over-provisioning leads to wasted spending, while under-provisioning creates performance bottlenecks. Finding the right balance maximizes your cloud investment. For more on database platforms, you might be interested in this comparison: Supabase vs. Firebase.

Effective Monitoring in Distributed Environments

Cloud databases often operate in distributed environments, adding complexity to monitoring. Robust monitoring tools are essential for tracking performance across multiple instances and identifying bottlenecks. They provide visibility into key metrics like latency, throughput, and resource utilization. This data is vital for identifying areas for improvement and maintaining peak performance.

Addressing Cloud-Specific Challenges

Cloud environments present unique challenges for database performance. Network latency can affect response times, especially when the application and the database sit in different regions. Multi-tenancy, where multiple customers' workloads share the same underlying infrastructure, can also cause performance to vary if not managed effectively. Strategies like caching and connection pooling can mitigate these challenges by reducing the number of round trips and the cost of opening new connections.
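
As a rough sketch of the caching strategy mentioned above (connection pooling is not shown), the following Python class keeps query results in memory for a fixed time so that repeated requests skip the network round trip. The class name `TTLCache`, the 30-second lifetime, and the stand-in loader function are all hypothetical. A production setup would more likely use a shared cache service and would need an invalidation strategy for data that changes.

```python
import time

class TTLCache:
    """Cache loader results per key for a fixed number of seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock      # injectable so expiry can be tested
        self._entries = {}       # key -> (expires_at, value)

    def get_or_load(self, key, loader):
        """Return the cached value, calling loader() only on a miss or expiry."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]
        value = loader()
        self._entries[key] = (now + self._ttl, value)
        return value

# Usage with a stand-in for an expensive cross-region query.
round_trips = []

def load_top_products():
    round_trips.append(1)  # count how often the "database" is hit
    return ["widget", "gadget"]

cache = TTLCache(ttl_seconds=30)
first = cache.get_or_load("top_products", load_top_products)
second = cache.get_or_load("top_products", load_top_products)
print(first == second, "round trips:", len(round_trips))
```

The second call is served from memory, so only one round trip is recorded. The trade-off is that results may be up to 30 seconds stale, which is acceptable for some data and not for others.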

A Framework for Cloud Database Optimization

Effective cloud database optimization requires a comprehensive framework. This framework should include:

- Leveraging cloud-specific features: Make the most of serverless scaling, specialized instance types, and managed services.

- Right-sizing resources: Find the balance between performance and cost by selecting appropriate resources.

- Implementing effective monitoring: Track key metrics across your distributed environment.

- Addressing cloud-specific challenges: Develop strategies to mitigate latency and multi-tenancy issues.

By following this framework, you can ensure consistent performance, optimize costs, and adapt to changing workloads. This approach helps maximize the benefits of cloud databases while minimizing the risks. Careful planning and utilization of cloud-specific resources allow you to focus on growth and innovation, rather than infrastructure management.

Building a Sustainable Optimization Culture

Database performance optimization isn't a one-time fix. It’s an ongoing process, much like tending a garden. Consistent effort yields the best results. This means building a sustainable optimization culture within your organization. This section outlines how leading organizations build and maintain database performance programs that deliver continuous improvement.

Establishing Performance Baselines

Before you can improve, you need to understand your current performance. Establishing performance baselines is crucial. This involves measuring key metrics such as query response times, throughput, and resource utilization. These baselines serve as your benchmark for measuring the effectiveness of any optimization efforts. It's akin to tracking your fitness – you need a starting point to gauge your progress.
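
A baseline can be as simple as a few summary statistics over recorded query latencies. The sketch below uses Python's standard `statistics` module. The function names, the sample latencies, and the 20% tolerance are hypothetical choices for illustration, and real baselines would be built from far more samples collected under representative load.

```python
import statistics

def summarize(latencies_ms):
    """Summarize latency samples (milliseconds) as median, p95, and max."""
    # quantiles(n=100) returns 99 cut points; index 94 is the 95th percentile.
    percentiles = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    return {
        "p50": statistics.median(latencies_ms),
        "p95": percentiles[94],
        "max": max(latencies_ms),
    }

def regressed(baseline, current, tolerance=0.20):
    """Report whether current p95 exceeds the baseline p95 by more than tolerance."""
    return current["p95"] > baseline["p95"] * (1 + tolerance)

# Hypothetical samples: mostly fast queries with one slow outlier.
baseline_samples = [10, 10, 11, 11, 12, 12, 12, 13, 14, 50]
baseline = summarize(baseline_samples)

# Simulated measurement after a change that doubled every latency.
after_change = summarize([2 * ms for ms in baseline_samples])

print("baseline:", baseline)
print("regressed:", regressed(baseline, after_change))
```

Tracking a high percentile alongside the median matters because a small share of slow queries can dominate the user experience while leaving the median almost unchanged.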

Comprehensive Monitoring: Catching Issues Early

Comprehensive monitoring acts like a security system for your database. Real-time monitoring tools, such as Datadog, can alert you to performance issues before they affect users. This proactive approach minimizes downtime and user frustration, crucial for maintaining peak performance and stability.

Scalable Optimization Workflows

As your database grows, your optimization processes must scale accordingly. Create standardized workflows for identifying, analyzing, and resolving performance bottlenecks. This ensures consistency and efficiency, allowing your team to handle increasing complexity without feeling overwhelmed.

Developing Team Capabilities

Investing in your team’s skills is a cornerstone of long-term success. Provide training on query optimization, indexing strategies, and performance monitoring tools. A skilled team can swiftly identify and resolve performance issues, empowering them to take ownership of database performance. This upfront investment yields substantial returns over time.

Setting Realistic Performance Targets

While ambitious goals are important, setting realistic performance targets is equally crucial. Unrealistic goals can lead to frustration and burnout. Collaborate with stakeholders to define achievable goals that align with business objectives. This approach fosters a sense of accomplishment and motivates the team for continuous improvement.

Prioritizing Optimization Initiatives

Not every optimization task carries the same weight. Prioritize initiatives that deliver the biggest impact by focusing on the most critical queries and bottlenecks. This targeted approach maximizes the return on your optimization efforts, similar to concentrating your marketing spend on the most effective campaigns.
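
One way to put this prioritization into practice is to rank queries by the total time they consume (number of calls multiplied by mean duration) rather than by how slow a single execution is. The sketch below assumes per-query statistics are already available in a simple list of dictionaries. The field names and figures are hypothetical, loosely modeled on the kind of data that views such as PostgreSQL's `pg_stat_statements` report.

```python
def rank_by_total_time(stats):
    """Sort query statistics by calls * mean_ms, largest total first."""
    return sorted(stats, key=lambda s: s["calls"] * s["mean_ms"], reverse=True)

# Hypothetical statistics gathered over the same time window.
stats = [
    {"query": "nightly_report", "calls": 1, "mean_ms": 90000.0},
    {"query": "orders_by_customer", "calls": 50000, "mean_ms": 4.0},
    {"query": "product_lookup", "calls": 200000, "mean_ms": 0.5},
]

ranked = rank_by_total_time(stats)
for entry in ranked:
    total_seconds = entry["calls"] * entry["mean_ms"] / 1000
    print(f'{entry["query"]}: {total_seconds:.0f} s total')
```

In this made-up data, the nightly report is by far the slowest single query, yet the frequently called `orders_by_customer` query consumes the most total time and would be the first candidate for tuning.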

Measuring Outcomes That Matter

Measuring the results of your optimization work is essential. Track key metrics and demonstrate the positive impact of your efforts. This could include showcasing how optimization has reduced costs, improved user experience, or increased revenue. Communicating these results to stakeholders reinforces the value of database performance optimization.

Knowledge Sharing and Documentation

Effective knowledge sharing is vital for long-term success. Encourage team members to document their optimization strategies and share their findings. A central repository for performance-related documentation makes knowledge readily accessible, preventing redundant work and fostering continuous learning.

Building a Performance-Focused Culture

Sustainable optimization ultimately requires a cultural shift. Foster a culture where database performance is a shared responsibility. This means everyone, from developers to business stakeholders, understands the importance of efficient databases. This shared understanding ensures ongoing attention to database performance, leading to a cycle of continuous improvement.

Fekri